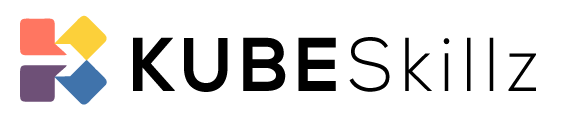

In a cloud-native environment, managing multiple Google Kubernetes Engine (GKE) clusters is common, especially when different parts of an application need to be isolated. However, these isolated clusters might still need to access a central database like Google Cloud SQL (PostgreSQL). In this blog post, we’ll guide you through the process of connecting multiple GKE VPC-native clusters to a single Cloud SQL instance, even when they reside in separate VPC networks.

The Challenge

Imagine you have several GKE clusters, each operating in its own Virtual Private Cloud (VPC) network, for reasons of security and isolation. On the other hand, you have a Cloud SQL instance (PostgreSQL) that serves as the central repository for your application’s data. The challenge is to enable seamless connectivity between any of the GKE clusters and the shared Cloud SQL instance, maintaining both network isolation and data security.

The Solution

To address this challenge, we will outline the steps to establish a secure and efficient connection between your GKE VPC-native clusters and the Cloud SQL database, regardless of the VPC network they are in.

- Dedicated Node Pools

Begin by creating dedicated node pools within each GKE cluster. These node pools are optimized for backend workloads and ensure that your applications requiring database access have the necessary resources. - Provisioning Cloud SQL

Set up a PostgreSQL database instance in Google Cloud SQL. This instance will serve as the central database for your application’s data.

We have many options for creating this instance: we can use Infrastructure as Code (IAC)/manual steps or gcloud command steps provided below:

gcloud sql instances create postgres-instance \

--project=monirul-test \

--database-version=POSTGRES_13 \

--cpu=2 \

--memory=4GiB \

--root-password="DummyPass765#" \

--availability-type=zonal \

--zone=us-west2-a

- Configuring Service Accounts After creating the service account, you need to assign the Cloud SQL Client role to it. To allow this service account to authenticate and access Cloud SQL, you need to generate a JSON key file.

Procedure to creating the service-account-key Secret from a file:

kubectl create secret generic service-account-key --from-file=key.json=/usr/local/monirul-test-c7a15664f347.json

This command will generate a key file named key.json in your current directory. This key file will be used by the Cloud SQL Proxy and your GKE application to authenticate.

- Deploying the Cloud SQL Proxy

Deploying the Cloud SQL Proxy as a sidecar is a crucial step in connecting your GKE application to a Cloud SQL database securely. Below is a Kubernetes Deployment YAML manifest demonstrating how to deploy the Cloud SQL Proxy container as a sidecar alongside your main application container:

apiVersion: apps/v1

kind: Deployment

metadata:

name: test-app-deployment

annotations:

linkerd.io/inject: enabled

spec:

replicas: 1

strategy:

type: RollingUpdate

rollingUpdate:

maxSurge: 50%

maxUnavailable: 0

selector:

matchLabels:

app: test-app

template:

metadata:

labels:

app: test-app

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: dedicated

operator: In

values:

- backend

tolerations:

- effect: NoSchedule

key: dedicated

operator: Equal

value: backend

containers:

- name: test-app

image: asia.gcr.io/monirul-test/test-app-deployment:test-app-1.0.0

ports:

- containerPort: 5000

name: test-app

resources:

limits:

cpu: '1'

memory: '3Gi'

requests:

cpu: '0.5'

memory: '2Gi'

env:

- name: DB_PASSWORD

valueFrom:

secretKeyRef:

name: test-app-secret

key: DB_PASSWORD

- name: APP_PROJECT_ID

valueFrom:

configMapKeyRef:

name: test-app-config

key: APP_PROJECT_ID

- name: DB_HOST

valueFrom:

configMapKeyRef:

name: test-app-config

key: DB_HOST

- name: DB_USER

valueFrom:

configMapKeyRef:

name: test-app-config

key: DB_USER

- name: APP_PORT

valueFrom:

configMapKeyRef:

name: test-app-config

key: APP_PORT

- name: DB_NAME

valueFrom:

configMapKeyRef:

name: test-app-config

key: DB_NAME

- name: DB_PORT

valueFrom:

configMapKeyRef:

name: test-app-config

key: DB_PORT

- name: DATABASE_MAX_CONNECTIONS

valueFrom:

configMapKeyRef:

name: test-app-config

key: DATABASE_MAX_CONNECTIONS

- name: cloud-sql-proxy

image: gcr.io/cloudsql-docker/gce-proxy:1.22.0

command:

- '/cloud_sql_proxy'

- '-ip_address_types=PUBLIC'

- '-instances=monirul-test:us-west2:postgres-instance=tcp:0.0.0.0:5432'

- '-credential_file=/var/secrets/cloud-sql/key.json'

resources:

requests:

cpu: '100m'

memory: '128Mi'

limits:

cpu: '200m'

memory: '256Mi'

volumeMounts: # Mount the Secret as a volume

- name: service-account-key

mountPath: /var/secrets/cloud-sql

volumes: # Define the volume that references the Secret

- name: service-account-key

secret:

secretName: service-account-key

This YAML manifest defines a Deployment for your application (“test-app-deployment”) and includes a sidecar container named “cloud-sql-proxy.” The Cloud SQL Proxy handles authentication and encryption for secure connections between your GKE application and the Cloud SQL database.

Ensure you replace the image with your actual image. Once deployed, this configuration allows your GKE application to securely access the Cloud SQL database using the Cloud SQL Proxy.

ConfigMap:

apiVersion: v1

kind: ConfigMap

metadata:

name: test-app-config

data:

APP_PORT: '5000'

APP_PROJECT_ID: monirul-test

DB_NAME: postgres

DB_HOST: localhost

DB_USER: postgres

DATABASE_MAX_CONNECTIONS: '15'

DB_PORT: '5432'

Secret:

apiVersion: v1

data:

DB_PASSWORD: RXhDZXJlODc0Iw==

kind: Secret

metadata:

name: test-app-secret

namespace: test-app

type: Opaque

Service.yaml

apiVersion: v1

kind: Service

metadata:

name: test-app-svc

spec:

ports:

- name: test-app-svc-port

protocol: TCP

port: 5000

targetPort: 5000

The provided configurations are clear and should work for your use case. Just make sure that the actual values you use for your application match the ones you’ve configured in the ConfigMap and Secret.

- Testing and Validation Certainly, testing and validation are essential steps to ensure the GKE to Cloud SQL connection works correctly. Your provided commands for testing and validation look good:

$ k exec -it test-app-deployment-786d4bdd56-2sktd -- sh

Defaulted container "test-app" out of: test-app, cloud-sql-proxy

# curl http://localhost:5000

{"message":"hello world"}

# curl http://localhost:5000/data

[["+60102098121","Monirul","Islam","devops.monirul@gmail.com"]]

These commands will help you test various aspects of your setup, including basic connectivity and data retrieval. Be sure to conduct thorough testing, including edge cases and error scenarios, to ensure the reliability and performance of your GKE to Cloud SQL connection.

The Benefits

Connecting multiple GKE VPC-native clusters to a centralized Cloud SQL instance provides several advantages:

Isolation: Each GKE cluster remains isolated within its own VPC network, enhancing security and separation of workloads.

Centralized Data Management: Data consistency is ensured with a single Cloud SQL database instance, simplifying data management.

Scalability: This architecture can scale horizontally to handle increased workloads and data storage requirements.

Security: Strong access controls, encryption, and secure connections guarantee data confidentiality and integrity.

Efficiency: The Cloud SQL Proxy streamlines database connections, reducing latency and ensuring reliable access.

Conclusion

Connecting multiple GKE VPC-native clusters to a shared Cloud SQL instance is a critical step in building scalable, secure, and efficient cloud-native applications. With the right architecture, tools, and best practices, you can seamlessly manage your data while maintaining network isolation and data security.

Reference:

https://cloud.google.com/sql/docs/mysql/connect-kubernetes-engine